Distributed Erlang

This document is a short guide to the structure and internals of the Erlang/OTP distributed messaging facility.

The intended audience is Erlang programmers with experience using distributed Erlang and an interest in its implementation.

Overview

The protocol Erlang nodes use to communicate is described across the distribution protocol and external term format sections of the ERTS User’s Guide.

The implementation is supported by features of the system applications, libraries, and the BEAM emulator.

Sections below describe process structure, flow of control, and points of contact between subsystems, with links to the source code at a recent commit hash on the maint branch.

Transport

The distribution protocol typically uses a single TCP socket over IPv4. The transport can be selected with the -proto_dist argument to the VM, which the net_kernel module respects both when it sets up its own distribution listener and when it connects to remote nodes.

To find the protocol module selected by a proto_dist value, append _dist.erl to the value and look for that file in the Erlang/OTP source.

- inet_tcp, the default; handles TCP streams with IPv4 addressing.

- inet6_tcp, an undocumented module prepared to handle TCP with IPv6 addressing. In principle there’s no reason inet_tcp could not be protocol-agnostic in this respect, but for now programmers setting up nodes on IPv6 networks need to specify -proto_dist inet6_tcp.

- inet_tls, connection using TLS to verify clients and encrypt internode traffic. Setup is detailed in the Secure Socket Layer User’s Guide. Note that, particularly within Erlang’s usual LAN deployment context, other options such as an internode VPN might be more convenient.

As well as transport negotiation, the protocol modules handle node registration and lookup, so a particular transport could use something other than epmd if required.
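The naming rule above can be sketched in a few lines of Erlang. This is only an illustration of the convention, not the code net_kernel runs; the module name proto_dist_sketch and its functions are hypothetical. It relies on init:get_argument/1, which returns one list of values per occurrence of a command-line flag, or error if the flag is absent.

```erlang
-module(proto_dist_sketch).
-export([dist_module/1, dist_modules/0]).

%% Map a -proto_dist value to the module name it selects,
%% e.g. "inet6_tcp" -> inet6_tcp_dist (source file inet6_tcp_dist.erl).
dist_module(Proto) when is_list(Proto) ->
    list_to_atom(Proto ++ "_dist").

%% Read -proto_dist from the command line of the running node,
%% falling back to the default transport when the flag is absent.
dist_modules() ->
    case init:get_argument(proto_dist) of
        {ok, Occurrences} ->
            [dist_module(P) || Values <- Occurrences, P <- Values];
        error ->
            [dist_module("inet_tcp")]
    end.
```

On a node started with -proto_dist inet6_tcp, dist_modules/0 would be expected to return a list containing inet6_tcp_dist.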

Discovery

Before applications on the network can talk, they need to locate one another with some form of service discovery. Erlang/OTP relies on both DNS and a portmap-style service called epmd (for “Erlang Port Mapping Daemon”).

epmd is a simple standalone daemon written in C which listens at port 4369 on a host running Erlang nodes. It implements a simple binary protocol to register and look up (Erlang node, TCP port) mappings. See the links from the overview above for details.

The Erlang system applications interact with epmd via the erl_epmd module.
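As a rough illustration of that interface, the sketch below queries epmd through erl_epmd from Erlang code. The wrapper module epmd_lookup_sketch and its function names are hypothetical; erl_epmd:names/1 and erl_epmd:port_please/2 are the calls assumed here, and their exact error returns may differ between OTP releases, so failures are handled with catch-all clauses.

```erlang
-module(epmd_lookup_sketch).
-export([local_names/0, port_of/2]).

%% Ask the epmd on this host which nodes are registered.
%% Returns a list of {Name, Port} pairs, or [] if epmd cannot be reached.
local_names() ->
    {ok, Host} = inet:gethostname(),
    case erl_epmd:names(Host) of
        {ok, Names} -> Names;
        _Error      -> []
    end.

%% Look up the distribution port registered for node Name on Host.
port_of(Name, Host) ->
    case erl_epmd:port_please(Name, Host) of
        {port, Port, _Version} -> {ok, Port};
        Other                  -> {error, Other}
    end.
```

Neither function needs the calling node to be distributed, since each lookup opens its own short-lived connection to epmd.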

Notes:

- An Erlang node starts epmd automatically if it is not already running on the host at startup. This is built in to the low-level erlexec C program used by erl to invoke the emulator.

- Each node registering itself with epmd maintains its open TCP connection after sending ALIVE2_REQ; epmd deregisters the node when that connection is closed or lost.

- The Extra field of ALIVE2_REQ is faithfully stored and echoed back by epmd in PORT2_RESP responses, but is not currently used.

- Both Name and Extra can have a maximum size of MAXSYMLEN.
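To make the Name and Extra fields concrete, here is a minimal sketch of the two messages using Erlang bit syntax, following the field layout given in the ERTS User’s Guide. The module epmd_wire_sketch is hypothetical, the version numbers (5, 5) are illustrative placeholders, and no MAXSYMLEN check is attempted.

```erlang
-module(epmd_wire_sketch).
-export([alive2_req/3, decode_port2_resp/1]).

-define(ALIVE2_REQ, 120).
-define(PORT2_RESP, 119).
-define(NODE_TYPE_NORMAL, 77).   % 72 would mark a hidden node
-define(PROTO_TCP_IPV4, 0).

%% Build an ALIVE2_REQ, preceded by the 2-byte length that frames
%% every epmd request. Highest/lowest version are placeholders.
alive2_req(Name, Port, Extra) when is_binary(Name), is_binary(Extra) ->
    Body = <<?ALIVE2_REQ, Port:16, ?NODE_TYPE_NORMAL, ?PROTO_TCP_IPV4,
             5:16, 5:16,
             (byte_size(Name)):16, Name/binary,
             (byte_size(Extra)):16, Extra/binary>>,
    <<(byte_size(Body)):16, Body/binary>>.

%% Decode a PORT2_RESP. A Result byte of 0 means success and is
%% followed by the registration data, including the echoed Extra.
decode_port2_resp(<<?PORT2_RESP, 0, Port:16, _Type, _Proto,
                    _High:16, _Low:16,
                    NLen:16, Name:NLen/binary,
                    ELen:16, Extra:ELen/binary>>) ->
    {ok, #{name => Name, port => Port, extra => Extra}};
decode_port2_resp(<<?PORT2_RESP, Result>>) when Result > 0 ->
    {error, Result}.
```

Decoding a response for a node registered with a non-empty Extra returns that same binary under the extra key, which is the echo behaviour described in the notes.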

System

Setup and maintenance of distributed Erlang listening and connection is handled by the kernel application’s net_kernel module.

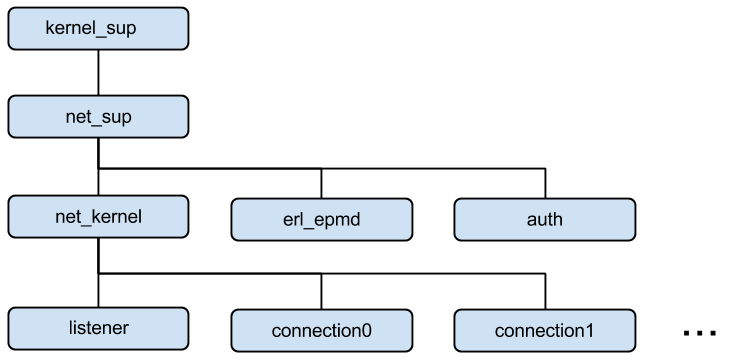

The process hierarchy:

net_kernel is started by the net_sup supervisor, if it can find -sname or -name arguments on the erl commandline. Started alongside it are erl_epmd and auth. All three are gen_servers. The latter two respectively handle maintaining a connection to epmd, as discussed above, and cookie management (including the backend for erlang:{set,get}_cookie).
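One way to observe this hierarchy on a running node is to look up the registered names. The sketch below assumes the processes are registered locally under the names used in the text (net_sup, net_kernel, erl_epmd, auth), which may not hold for every OTP release or for nodes using a custom epmd module; the module dist_procs_sketch is hypothetical.

```erlang
-module(dist_procs_sketch).
-export([report/0]).

%% Report whether the node is distributed and which of the
%% distribution processes are currently registered.
%% On a node started without -sname or -name, is_alive() is false
%% and the names typically map to undefined.
report() ->
    Names = [net_sup, net_kernel, erl_epmd, auth],
    #{alive      => is_alive(),
      %% erlang:get_cookie/0 returns nocookie when the node is not alive
      has_cookie => erlang:get_cookie() =/= nocookie,
      processes  => [{Name, whereis(Name)} || Name <- Names]}.
```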

net_kernel immediately sets up a listening socket for the node by invoking Mod:listen/1 for the proto_dist module (by default inet_tcp_dist). Note the facility to supply listen options for distributed Erlang sockets via the commandline.
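As an illustration of supplying listen options, the fragment below sets them as kernel application parameters in a sys.config file. It assumes the inet_dist_listen_options, inet_dist_listen_min and inet_dist_listen_max parameters described in the kernel application documentation; the values are arbitrary examples rather than recommendations.

```erlang
%% sys.config fragment (illustrative values only)
[{kernel,
  [%% restrict the distribution listener to a port range
   {inet_dist_listen_min, 9100},
   {inet_dist_listen_max, 9105},
   %% extra socket options passed when the listener is opened
   {inet_dist_listen_options, [{recbuf, 131072}]}]}].
```

The same parameters can be given on the commandline in the usual -kernel Parameter Value form.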

After registering this as the node’s distribution socket with the emulator (via erlang:setnode/2, see below), net_kernel invokes Mod:accept/1, e.g. for inet_tcp_dist. By default, this creates the listener process in the diagram above, running inet_tcp_dist:accept_loop/2.

After this, there are two cases for net_kernel to deal with:

- An incoming connection from another node. This triggers a call to the proto_dist Mod:accept_connection/5, which in turn starts one of the connectionN processes in the diagram above and performs the distributed Erlang handshake using the dist_util module. On a successful handshake, dist_util:do_setnode/1 registers the newly connected node with the emulator (via erlang:setnode/3, see below).

- An outgoing connection to another node. This is handled by net_kernel:connect/1, which is called transparently by the d* functions in the erlang module (see the emulator section below). net_kernel:connect/1 does much the same work as for an incoming connection (including setting up one of the connectionN processes in the diagram above), but via Mod:setup/5 in the proto_dist module.
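The outgoing case can also be triggered explicitly from application code. The sketch below uses the public net_kernel:connect_node/1 rather than the internal net_kernel:connect/1 named above; the wrapper module dist_connect_sketch and its error atoms are hypothetical.

```erlang
-module(dist_connect_sketch).
-export([connect/1]).

%% Explicitly set up a connection to Node.
%% net_kernel:connect_node/1 returns true on success, false on
%% failure, and ignored when the local node is not distributed.
connect(Node) when is_atom(Node) ->
    case net_kernel:connect_node(Node) of
        true    -> {ok, nodes(connected)};
        false   -> {error, unreachable};
        ignored -> {error, not_distributed}
    end.
```

For example, dist_connect_sketch:connect('b@host') on a node started with -sname a would attempt the handshake with b@host and, on success, return the list of currently connected nodes.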

Finally, note that net_kernel can also be invoked as part of erlang:spawn/4 to spawn processes on a remote node.

Emulator

While the Erlang system, via net_kernel, handles connection setup, the nuts and bolts of the distributed Erlang protocol are handled by the emulator itself. The API it exposes is small, however, and mostly found in the built-in functions registered by dist.c.

First up are the setnode BIFs. These register either the local node (erlang:setnode/2) or remote nodes (erlang:setnode/3) in the emulator’s node tables. There are separate distribution tables for visible and hidden nodes; this is how the -hidden node commandline argument is used. The erlang:nodes/0 BIF reads these tables directly.
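The visible/hidden split is observable from Erlang through nodes/0 and nodes/1. The following is a small sketch (the module dist_nodes_sketch is hypothetical) that collects the views side by side.

```erlang
-module(dist_nodes_sketch).
-export([summary/0]).

%% Collect the emulator's view of connected nodes.
%% nodes() is equivalent to nodes(visible); nodes(connected)
%% covers both visible and hidden connections.
summary() ->
    #{visible   => nodes(),
      hidden    => nodes(hidden),
      connected => nodes(connected)}.
```

On a node with no connections, or one that is not distributed at all, all three lists are empty.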

BIFs like erlang:send/2 look up the node tables and call into the erts_dsig_* functions to handle distributed messaging. If a connection is necessary, these can trap back to e.g. erlang:dsend/2 to invoke connection setup via net_kernel.

Once connections are established, the erts_dsig_send_* functions are used to do the actual messaging. Message encoding in the external term format is handled by dsig_send.
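The external term format itself can be inspected without any distribution at all, since term_to_binary/1 exposes the same encoding. The sketch below (module etf_sketch is hypothetical) pulls out the tag byte that follows the version byte 131. Note that messages on a distribution connection may additionally carry a distribution header, which this plain encoding does not show.

```erlang
-module(etf_sketch).
-export([tag/1]).

%% Return the external-term-format tag byte of Term's encoding.
%% term_to_binary/1 emits the version byte 131 first, followed
%% by a tag identifying the term's type.
tag(Term) ->
    <<131, Tag, _/binary>> = term_to_binary(Term),
    Tag.
```

For instance, etf_sketch:tag(42) returns 97 (SMALL_INTEGER_EXT) and etf_sketch:tag({a, b}) returns 104 (SMALL_TUPLE_EXT).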

Updates

Please send any comments or suggestions for this document to cian@emauton.org.